I want to say a bit about how the Gamma function can be characterized, because I’m not a huge fan of the ways I’ve seen in print.

Let’s make this quick. I want to know about functions γ such that:

1. γ(1) = 1,

2. γ satisfies the functional equation* γ(t+1) = t γ(t), and

3. γ is a meromorphic function on the complex plane*.

Note that conditions (1) and (2) together mean that γ(n) = (n-1)! for positive integers n.
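Spelled out, this is just repeated use of (2), bottoming out at (1):

$$\gamma(n) = (n-1)\,\gamma(n-1) = (n-1)(n-2)\,\gamma(n-2) = \cdots = (n-1)!\,\gamma(1) = (n-1)!$$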

We know that one such function exists, namely the classical Gamma function. For positive real x we may define

$$\Gamma(x) = \int_0^\infty s^{x-1} e^{-s}\,ds,$$

and this function has an analytic continuation, which we’ll also denote Γ, that’s a meromorphic function on the complex plane with poles at the nonpositive integers.
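As a sanity check on the integral definition, here is a minimal numerical sketch. It is an illustration only, not how Γ is computed in practice. It approximates the integral with Simpson's rule on the truncated interval [0, 60] and compares against Python's built-in `math.gamma`. The cutoff, the step count, and the restriction x ≥ 1 (which keeps the integrand bounded at s = 0) are assumptions of this sketch, and the helper name `gamma_integral` is hypothetical.

```python
import math


def gamma_integral(x: float, upper: float = 60.0, n: int = 20_000) -> float:
    """Approximate Gamma(x) = integral_0^inf s**(x-1) * exp(-s) ds by Simpson's rule.

    Sketch assumptions: x >= 1 (so the integrand stays bounded at s = 0) and
    the tail of the integral beyond `upper` is negligible.
    """
    if x < 1:
        raise ValueError("this sketch only handles x >= 1")
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals

    def integrand(s: float) -> float:
        return s ** (x - 1) * math.exp(-s)

    h = upper / n
    total = integrand(0.0) + integrand(upper)
    for i in range(1, n):
        # Simpson weights: 4 on odd nodes, 2 on interior even nodes.
        total += (4 if i % 2 else 2) * integrand(i * h)
    return total * h / 3


for x in (1.0, 2.5, 4.0):
    print(x, gamma_integral(x), math.gamma(x))
```

Handling 0 < x < 1 this way would need extra care at s = 0. One alternative is to compute Γ(x+1) and divide by x, using the functional equation.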

Suppose γ is another function satisfying (1) – (3), and consider the function

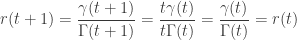

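Then r is a meromorphic function (Γ has no zeros, so 1/Γ is entire) with r(1) = γ(1)/Γ(1) = 1/1 = 1 and

$$r(t+1) = \frac{\gamma(t+1)}{\Gamma(t+1)} = \frac{t\,\gamma(t)}{t\,\Gamma(t)} = r(t),$$

so r is periodic with period 1.
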
Conversely, if r is any singly-periodic meromorphic function with period 1 and r(1) = 1, and we let γ(t) := r(t) Γ(t), then γ clearly satisfies (1) – (3). So, given that we already know about the classical Γ function, the problem of classifying every function satisfying (1) – (3) reduces to the problem of classifying these functions r.
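To see that (1) – (3) alone really do leave room for other solutions, take r(t) = cos(2πt), which has period 1 and r(1) = 1. The sketch below checks numerically that γ(t) = cos(2πt) Γ(t) satisfies (1) and (2) at a few sample points, using `math.gamma` for Γ on the positive reals, while differing from Γ away from the integers. The name `gamma_alt` is hypothetical.

```python
import math


def gamma_alt(t: float) -> float:
    """gamma(t) = r(t) * Gamma(t) with the period-1 factor r(t) = cos(2*pi*t).

    Only evaluated for t > 0 here, where math.gamma is defined.
    """
    return math.cos(2 * math.pi * t) * math.gamma(t)


# Condition (1): gamma(1) = cos(2*pi) * Gamma(1) = 1.
print(gamma_alt(1.0))

# Condition (2): gamma(t + 1) = t * gamma(t), checked at a few non-integer points.
for t in (0.3, 1.7, 2.1, 3.9):
    print(t, gamma_alt(t + 1), t * gamma_alt(t))

# ...but gamma_alt is not Gamma: at t = 1/2 it gives cos(pi) * sqrt(pi) = -sqrt(pi).
print(gamma_alt(0.5), math.gamma(0.5))
```

At the positive integers cos(2πn) = 1, so this γ still agrees with (n-1)!. The two functions only differ between the integers.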

Taking the quotient of the complex plane by the translation sending z to z+1 (more precisely, by the group of translations z ↦ z+n for integers n) gives us a cylinder, so equivalently we’re looking for meromorphic functions on this cylinder. This cylinder is isomorphic (a.k.a. biholomorphic, a.k.a. conformally equivalent) to the punctured complex plane, via the map z ↦ exp(2πiz).
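In the coordinate w = exp(2πiz), a period-1 function of z becomes a function of w on the punctured plane. For example, cos(2πz) turns into a Laurent polynomial in w:

$$w = e^{2\pi i z}, \qquad e^{2\pi i (z+1)} = e^{2\pi i z}, \qquad \cos(2\pi z) = \frac{e^{2\pi i z} + e^{-2\pi i z}}{2} = \frac{w + w^{-1}}{2}.$$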

Unfortunately the noncompactness of the cylinder is a serious problem if we want to describe its function field. I’m not an expert here, but my understanding is that the situation looks like this:

- The holomorphic functions on the cylinder (i.e., entire functions with period 1) are just given by Fourier series.

- The meromorphic ones with finitely many poles are just ratios of the holomorphic ones.

- The meromorphic ones with infinitely many poles, though, aren’t so easy to describe.
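The first two bullets can be made concrete in the coordinate w = exp(2πiz). A holomorphic function on the cylinder is a Laurent series in w converging on the whole punctured plane, which in z is a Fourier series. The function tan(πz) has a single pole on the cylinder (at z = 1/2 mod 1, i.e. w = -1), and it is a ratio of two such functions:

$$f(z) = \sum_{n \in \mathbb{Z}} a_n e^{2\pi i n z} = \sum_{n \in \mathbb{Z}} a_n w^n, \qquad \tan(\pi z) = \frac{e^{2\pi i z} - 1}{i\,(e^{2\pi i z} + 1)} = \frac{w - 1}{i\,(w + 1)}.$$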

One way people try to deal with this situation is by putting some arbitrary analytic condition on the functions that limits exposure to the third class of functions. This is in essence what the Wielandt characterization of the Gamma function is doing.
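For comparison, here is the usual statement of Wielandt's theorem. The extra analytic condition is boundedness on a vertical strip, and it is strong enough to force the periodic factor r to be constant:

$$F \text{ holomorphic on } \{\operatorname{Re} z > 0\},\quad F(1) = 1,\quad F(z+1) = z\,F(z),\quad \sup_{1 \le \operatorname{Re} z \le 2} |F(z)| < \infty \quad\Longrightarrow\quad F = \Gamma \text{ on } \{\operatorname{Re} z > 0\}.$$

For instance, F(z) = cos(2πz) Γ(z) meets the first three conditions but not the last. On the strip, |cos(2π(x+iy))| grows like e^{2π|y|}/2, while Stirling's formula says |Γ(x+iy)| decays only like |y|^{x-1/2} e^{-π|y|/2}.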

For more on the function field of the cylinder, see:

*It’s probably worth saying a couple of things for people who aren’t familiar with the subject. These are the sorts of things that bothered me when I was first learning complex analysis and which, while sort of appearing in texts, never seem to be highlighted to the extent that they deserve.

First, notice that if we try to plug t=0 into this functional equation, we get

$$1 = \gamma(1) = 0 \cdot \gamma(0),$$

which we cannot solve for γ(0). So what do we mean when we say that γ satisfies this functional equation? Well, we mean that it’s satisfied whenever both t and t+1 are in the domain of γ. We see in particular that t=0 cannot be in the domain of any function γ satisfying conditions (1)-(3).

This appears at first to open another can of worms, since if we’re allowed to throw points out of the domain of γ at will we could simply look at every pair of points not satisfying the functional equation and throw out one or the other of them at random.

But we’re considering a meromorphic function on the plane. Such a function is defined at every point in the plane except on some countable discrete set with no accumulation point in the plane. (“Countable” is redundant here: every uncountable set of points in the plane fails to be discrete.) In fact, by the Riemann removable singularities theorem, we can further insist that such a function is undefined only where it “has to be” (i.e., where the function approaches infinity in magnitude near a point). To be quite technical, we generally think not of a particular meromorphic function but rather of an equivalence class of meromorphic functions under the relation f ~ g if f(z) = g(z) at every point z in the domain of both functions.
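Two small examples of the difference between a point a function can be extended to and a point it “has to” omit. The first is undefined at 1 as written but is equivalent to z. The second shows that any γ satisfying (1) – (3) really does blow up at 0, since γ is holomorphic near 1 and so γ(t+1) → γ(1) = 1:

$$\frac{z^2 - z}{z - 1} \sim z \quad (\text{removable at } z = 1), \qquad \gamma(t) = \frac{\gamma(t+1)}{t},\ \ \gamma(t+1) \to 1 \text{ as } t \to 0 \implies |\gamma(t)| \to \infty \quad (\text{pole at } t = 0).$$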

Anyway, the point is that everything’s fine — we won’t have any analytic pathologies creeping in.
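For what it’s worth, CPython’s `math.gamma` treats the nonpositive integers the same way. It raises `ValueError` there rather than returning a value, and near 0 it grows like 1/t, consistent with Γ(t) = Γ(t+1)/t. This is a property of that library function, not part of the argument above. The helper `gamma_or_none` is hypothetical.

```python
import math
from typing import Optional


def gamma_or_none(t: float) -> Optional[float]:
    """Return math.gamma(t), or None where CPython raises ValueError (the poles)."""
    try:
        return math.gamma(t)
    except ValueError:
        return None


# Nonpositive integers: math.gamma refuses them.
for t in (0.0, -1.0, -2.0):
    print(t, gamma_or_none(t))

# Near t = 0, Gamma(t) = Gamma(t + 1) / t blows up like 1/t,
# so t * Gamma(t) tends to Gamma(1) = 1.
for eps in (1e-2, 1e-4, 1e-6):
    print(eps, eps * math.gamma(eps))
```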
